TL;DR: We introduce CoMo, a novel framework for compositional motion customization in text-to-video generation, enabling the synthesis of multiple, distinct motions within a single video.

Abstract

While recent text-to-video models excel at generating diverse scenes, they struggle with precise motion control, particularly for complex, multi-subject motions. Although methods for single-motion customization have been developed to address this gap, they fail in compositional scenarios due to two primary challenges: motion-appearance entanglement and ineffective multi-motion blending. This paper introduces CoMo, a novel framework for compositional motion customization in text-to-video generation, enabling the synthesis of multiple, distinct motions within a single video. CoMo addresses these issues through a two-phase approach. First, in the single-motion learning phase, a static-dynamic decoupled tuning paradigm disentangles motion from appearance to learn a motion-specific module. Second, in the multi-motion composition phase, a plug-and-play divide-and-merge strategy composes these learned motions without additional training by spatially isolating their influence during the denoising process. To facilitate research in this new domain, we also introduce a new benchmark and a novel evaluation metric designed to assess multi-motion fidelity and blending. Extensive experiments demonstrate that CoMo achieves state-of-the-art performance, significantly advancing the capabilities of controllable video generation.

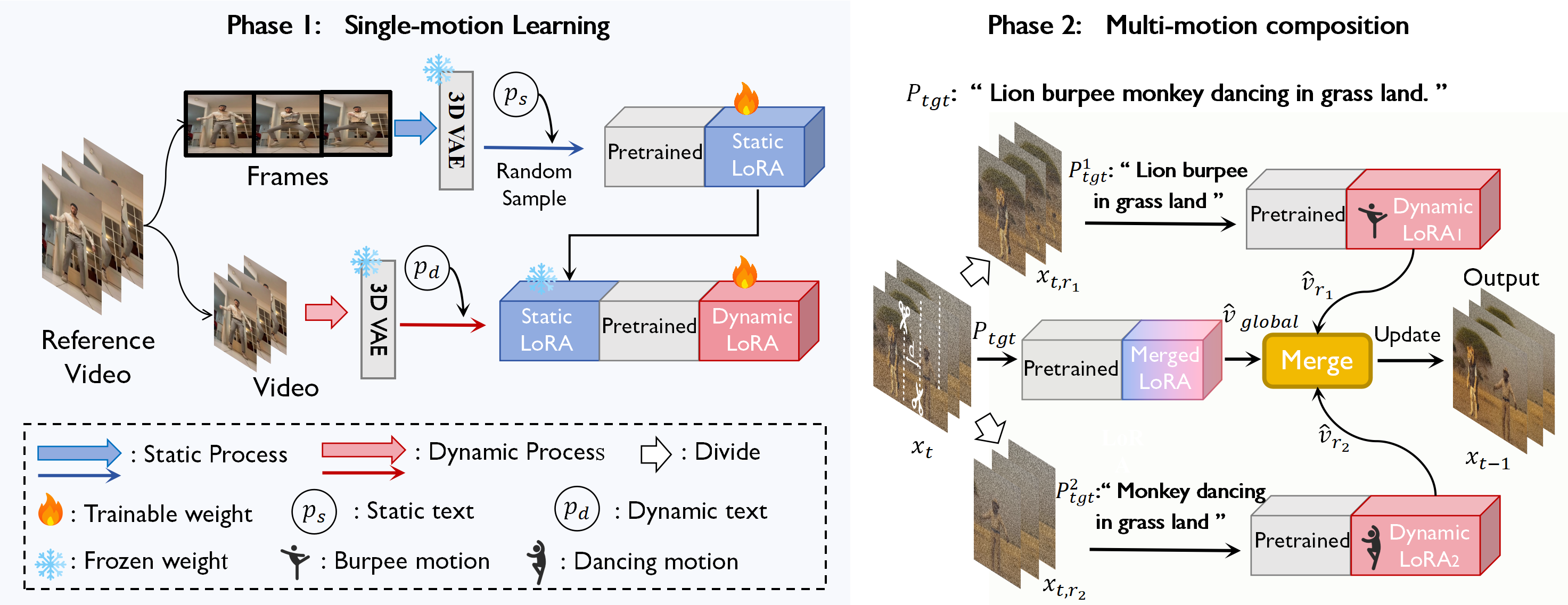

An overview of our proposed CoMo framework

Our method consists of two phases:

Decoupled single-motion learning: we first train a static LoRA module on a randomly sampled frame of the reference video, focusing solely on learning its appearance.

Then, we freeze the static LoRA and train a dynamic LoRA module on the complete video to exclusively capture its motion patterns.
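The intuition behind the static-dynamic split can be illustrated with a deliberately simplified toy, sketched below under our own assumptions. Frames are plain vectors built as appearance plus a per-frame motion term. The "static module" is a single time-invariant vector fitted to frames scored one at a time. The "dynamic module" is a per-frame residual fitted with the static vector frozen. This is not CoMo's LoRA training code: `train_static` and `train_dynamic` are hypothetical names, and plain gradient descent stands in for diffusion-model fine-tuning.

```python
# Toy analogy of the two-phase schedule (hypothetical, NOT CoMo's training code).
# A "video" is a list of frames; each frame is a list of floats formed as
# appearance (shared by all frames) + motion (varies per frame).


def train_static(video, steps=500, lr=0.1):
    """Phase 1: fit one time-invariant vector (stand-in for the static LoRA).

    Every frame is scored on its own, with no temporal context, so the best
    fit is the per-position mean frame.
    """
    d = len(video[0])
    static = [0.0] * d
    for _ in range(steps):
        for i in range(d):
            # Gradient of the mean over frames of (static[i] - frame[i]) ** 2.
            grad = sum(2.0 * (static[i] - frame[i]) for frame in video) / len(video)
            static[i] -= lr * grad
    return static


def train_dynamic(video, static, steps=500, lr=0.1):
    """Phase 2: keep `static` frozen and fit a per-frame residual (stand-in for the dynamic LoRA)."""
    dynamic = [[0.0] * len(video[0]) for _ in video]
    for _ in range(steps):
        for t, frame in enumerate(video):
            for i, target in enumerate(frame):
                pred = static[i] + dynamic[t][i]  # static is read but never updated
                dynamic[t][i] -= lr * 2.0 * (pred - target)
    return dynamic


appearance = [1.0, 2.0, 3.0]
# Motion averages to zero over time, so it cannot leak into the static fit.
motion = [[-1.0, 0.0, 0.5], [0.0, 0.0, 0.0], [1.0, 0.0, -0.5]]
video = [[a + m for a, m in zip(appearance, step)] for step in motion]

static = train_static(video)
dynamic = train_dynamic(video, static)
```

Because the static fit never sees temporal order, it settles on what all frames share, leaving the dynamic fit with only the frame-to-frame variation. In this toy the separation is exact only because the motion term averages to zero over time; any non-zero mean motion would be absorbed by the static vector.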

Plug-and-play multi-motion composition: we introduce a divide-and-merge strategy for compositional motion generation, with all model weights kept frozen.
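Below is a minimal sketch of the divide-and-merge idea at one denoising step. It assumes a flow-matching sampler integrated with explicit Euler steps and one spatial region mask per motion. `VelocityFn`, `divide_and_merge`, and `euler_step` are hypothetical names. Each predictor stands in for the frozen base model with one learned motion module loaded, and the toy uses hard, non-overlapping 1-D masks rather than real video latents.

```python
from typing import Callable, List

Vector = List[float]
# Hypothetical interface: frozen base model + one motion module,
# mapping (latent, timestep) -> predicted velocity.
VelocityFn = Callable[[Vector, float], Vector]


def divide_and_merge(latent: Vector, t: float,
                     predictors: List[VelocityFn], masks: List[Vector]) -> Vector:
    """Divide: query each motion's predictor. Merge: keep it only inside its region."""
    merged = [0.0] * len(latent)
    for predict, mask in zip(predictors, masks):
        velocity = predict(latent, t)  # inference only; no weights are updated
        for i, (v, m) in enumerate(zip(velocity, mask)):
            merged[i] += m * v
    return merged


def euler_step(latent: Vector, velocity: Vector, dt: float) -> Vector:
    """One explicit Euler step of the flow ODE: x <- x + dt * v (assumed sampler)."""
    return [x + dt * v for x, v in zip(latent, velocity)]


# Toy 1-D "frame" with 4 positions: motion A drifts left, motion B drifts right.
def move_left(x: Vector, t: float) -> Vector:
    return [-1.0] * len(x)


def move_right(x: Vector, t: float) -> Vector:
    return [1.0] * len(x)


masks = [[1.0, 1.0, 0.0, 0.0],   # region assigned to motion A
         [0.0, 0.0, 1.0, 1.0]]   # region assigned to motion B

latent = [0.0, 0.0, 0.0, 0.0]
velocity = divide_and_merge(latent, 0.0, [move_left, move_right], masks)
latent = euler_step(latent, velocity, dt=0.5)
```

Only forward passes are involved, so composing motions updates no weights, consistent with the strategy being plug-and-play.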

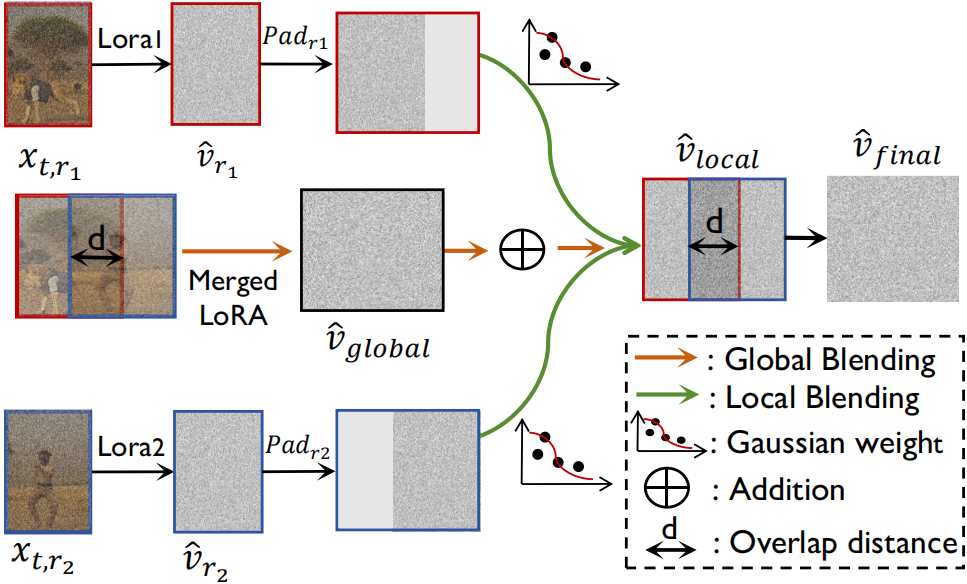

Merge process

The final velocity prediction is generated by merging regional velocities with a Gaussian Smooth Transition for local blending, followed by a Global Blending step to ensure overall consistency.
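The merge step described above can be approximated as follows. This is a sketch under our own assumptions, not the paper's exact formulation. The Gaussian Smooth Transition is modeled as blurring each hard region mask with a normalized 1-D Gaussian kernel and renormalizing the overlapping weights. Global Blending is modeled as a convex mix, with a hypothetical weight `lam`, between the locally blended velocity and a whole-frame velocity `v_global`. All function names and default parameters are illustrative.

```python
import math
from typing import List

Vector = List[float]


def gaussian_kernel(sigma: float, radius: int) -> Vector:
    """Normalized 1-D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_mask(mask: Vector, sigma: float, radius: int) -> Vector:
    """Blur a hard region mask so it fades out across the region border."""
    kernel = gaussian_kernel(sigma, radius)
    n = len(mask)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in zip(range(-radius, radius + 1), kernel):
            j = min(max(i + k, 0), n - 1)  # replicate edge values at the borders
            acc += w * mask[j]
        out.append(acc)
    return out


def merge_velocities(regional: List[Vector], masks: List[Vector], v_global: Vector,
                     lam: float = 0.2, sigma: float = 1.0, radius: int = 2) -> Vector:
    """Assumed form: local blending with soft masks, then a convex mix with a global velocity."""
    soft = [smooth_mask(m, sigma, radius) for m in masks]
    merged = []
    for i in range(len(v_global)):
        total = max(sum(s[i] for s in soft), 1e-8)  # renormalize weights to sum to 1
        local = sum(s[i] * v[i] for s, v in zip(soft, regional)) / total
        merged.append((1.0 - lam) * local + lam * v_global[i])
    return merged


left = [1.0] * 4 + [0.0] * 4
right = [0.0] * 4 + [1.0] * 4
regional = [[-1.0] * 8, [1.0] * 8]  # toy velocities from two motion modules
v_global = [0.0] * 8                # toy velocity from a hypothetical whole-frame prediction
merged = merge_velocities(regional, [left, right], v_global)
```

Under these assumptions, `sigma` and `radius` set the width of the transition band between neighboring regions, while `lam` trades per-region motion fidelity against frame-wide consistency.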